After the initial exploration of PowerShell, learning about pipelines and looking at interactive vs. scripted execution, it is time to do something useful. I have picked an example from “the real world”: an application uses a set of file-system directories, including access permissions, to store its data. When this application is installed, the person installing it needs to create the nested directory structure and assign access control to specific groups. So far, the services people have done that manually for every system they ever installed. Let’s see if there is some room for improvement here.

Setting the Scene

In a first attempt, we will settle for creating the required file system structure. Let’s assume we need an ApplicationData directory that contains sub-directories for three different types of information:

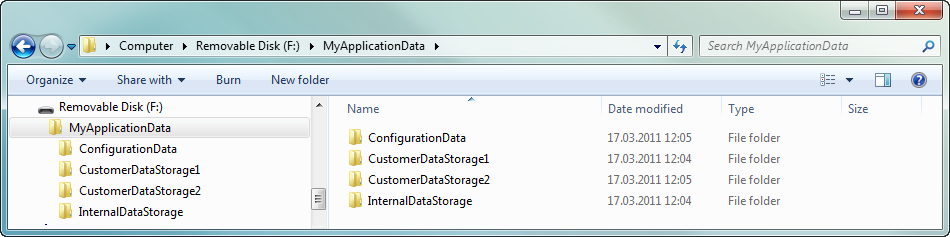

- Configuration Data: a directory which will later host the configuration data for the application.

- Customer Data: one or more directories which a customer can use to store specific information processed by the system.

- Internal Data: one directory which the system uses to store its internal data structures.

Assuming that the customer for whom we are installing the software wants two Customer Data areas, the following directory structure is what we need:

With this first approach, we are also deliberately leaving some aspects aside:

- We are not interested in any type of configuration file; the directories and their number will be hard-coded!

- We are not interested in setting any access permissions at this point!

- We are not interested in creating a Windows Share for the directory structure!

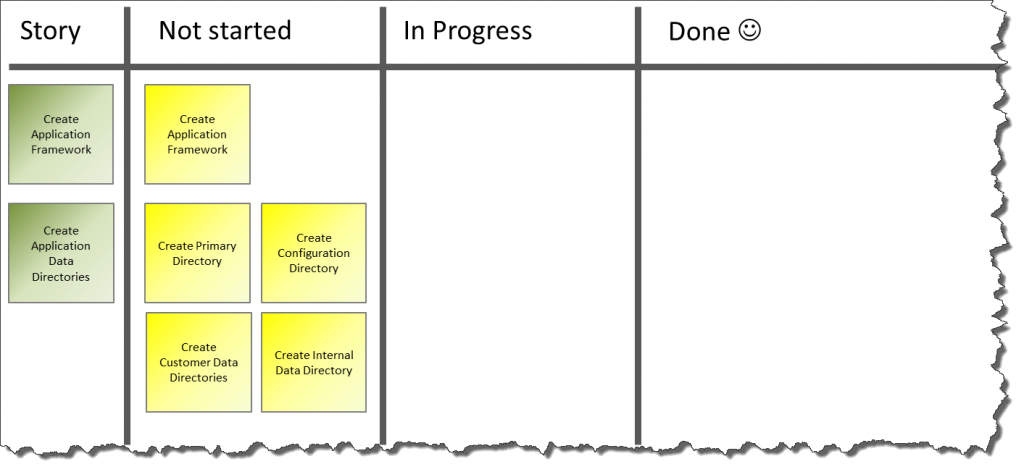

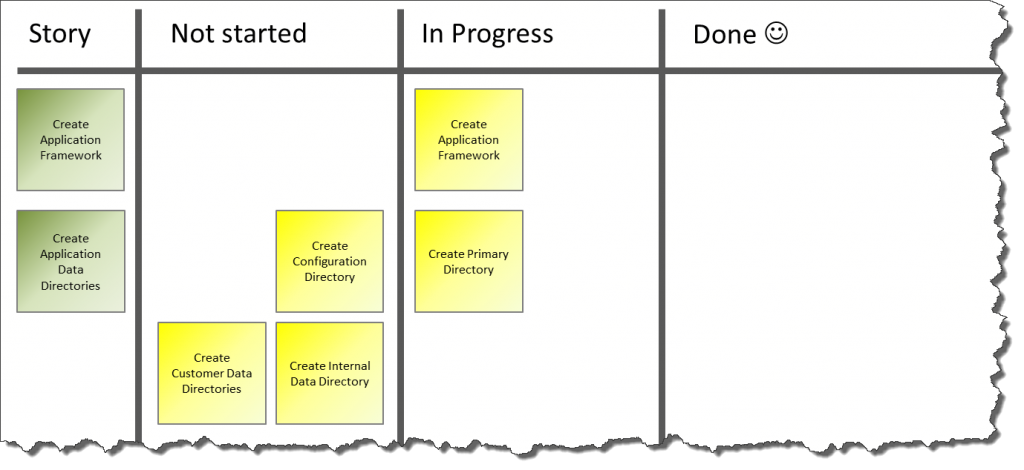

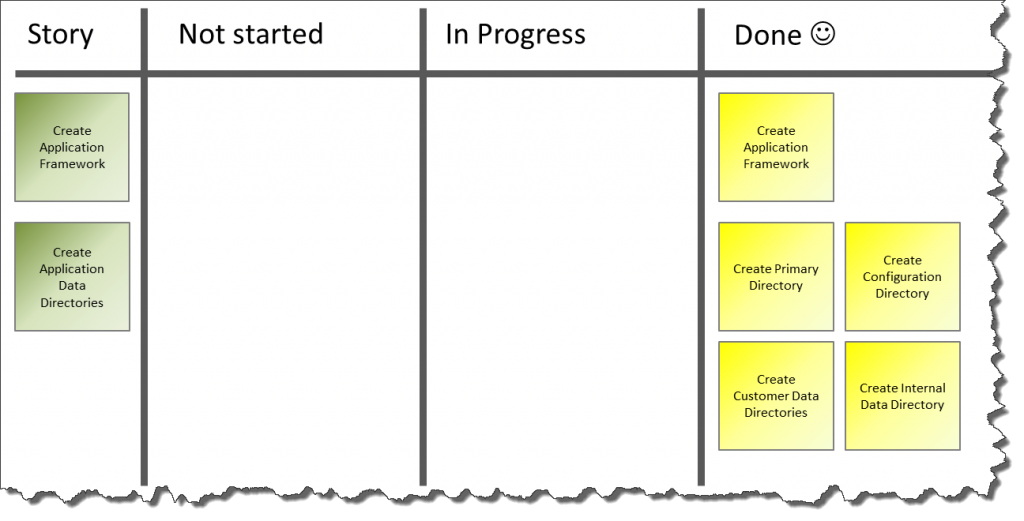

The three areas above are deliberately out of scope, but we will come back to them later. Our Task Board might now look like this:

Let’s see what we can make from it…

The first Step: Application Framework & Primary Directory

Let’s get started on those two items first: the creation of the primary directory intuitively sticks out as the initial task, but it also requires us to work on the application framework. What we learn from doing those two tasks will help us perform the other tasks more quickly.

Let’s use the Windows PowerShell ISE to create a new script. Here is the script code for you to copy:

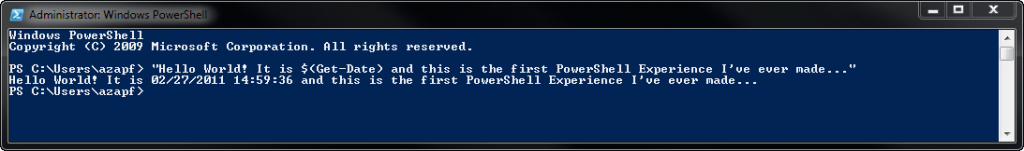

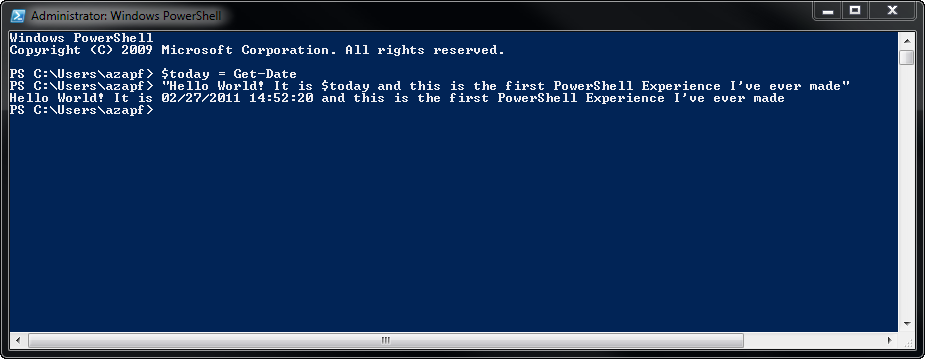

```powershell
# File System Configuration Script for MyApplication
#
# Copyright (C)2011 by Andreas Zapf
#
# Please feel free to re-use and adapt as required :)

# Global Variable Definitions
$TargetDrive = "F:\"
$PrimaryDirectory = "MyApplicationData"

# Create the primary directory
New-Item ($TargetDrive + $PrimaryDirectory) -type directory
```

And now let’s look at what this does: first, we are creating a variable $TargetDrive which is set to F:\. The idea here is to separate the actual drive letter from the directory names to allow an easy switch to a different drive where needed.

Next, a variable $PrimaryDirectory is defined and set to MyApplicationData. This is the name of the primary directory we want to create.
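Because the two parts are joined with plain string concatenation, the trailing backslash in $TargetDrive matters. The short sketch below only builds strings (nothing is created on disk) and shows the resulting path as well as what you would get if the backslash were forgotten.

```powershell
# Same values as in the script above; nothing is created on disk here
$TargetDrive = "F:\"
$PrimaryDirectory = "MyApplicationData"

# The + operator on two strings simply concatenates them
$FullPath = $TargetDrive + $PrimaryDirectory
$FullPath   # F:\MyApplicationData

# Without the trailing backslash the result is "F:MyApplicationData",
# which Windows treats as relative to the current directory on drive F:
$MissingSeparator = "F:" + $PrimaryDirectory
$MissingSeparator   # F:MyApplicationData
```

That second form is a valid, but drive-relative, path, so a missing backslash can silently create the directory somewhere unexpected.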

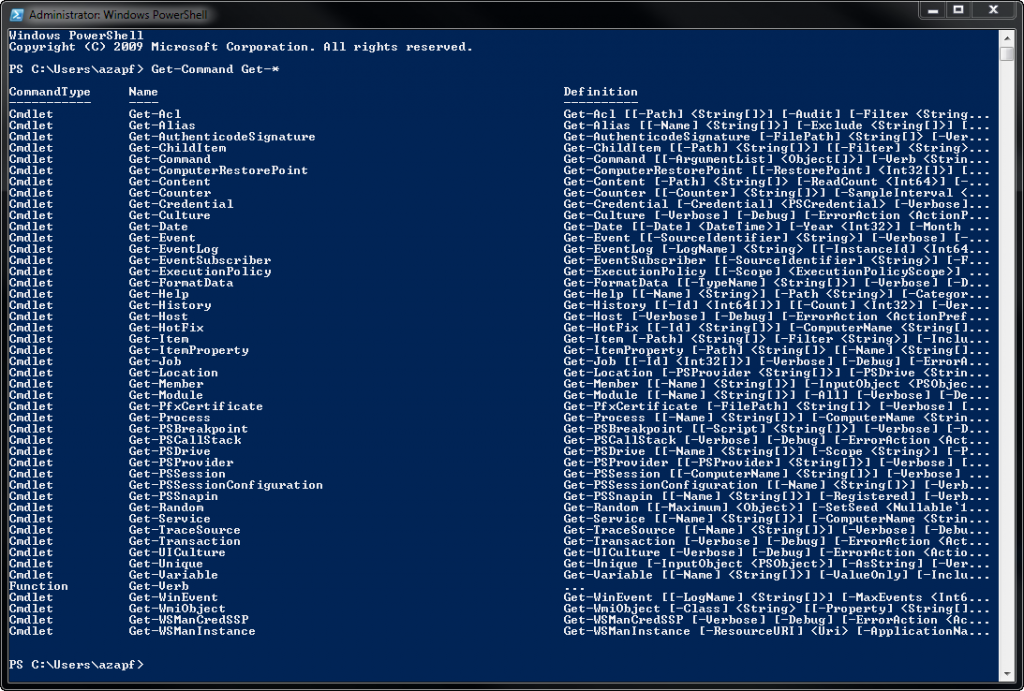

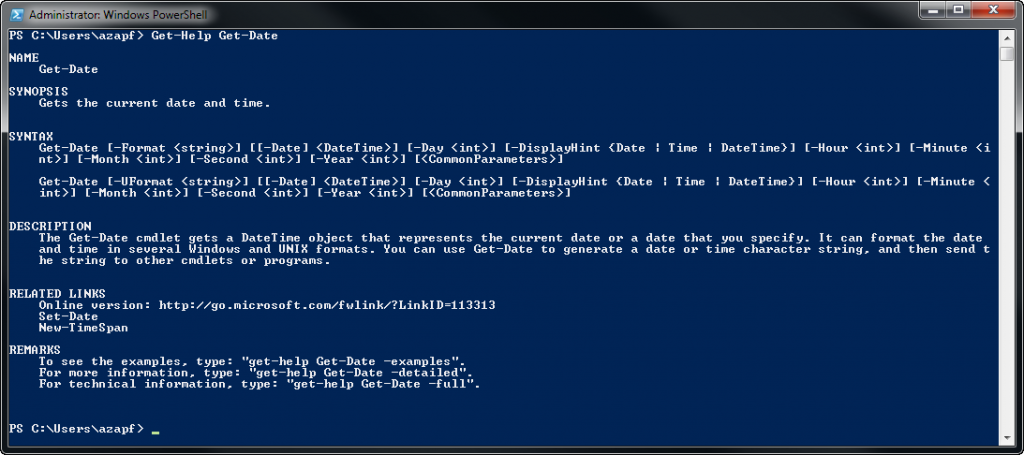

Finally, we use the New-Item cmdlet to create a directory. If you look up the help for New-Item with the Get-Help cmdlet, you will receive the following information:

The New-Item cmdlet creates a new item and sets its value. The types of items that can be created depend upon the location of the item. For example, in the file system, New-Item is used to create files and folders. In the registry, New-Item creates registry keys and entries.

Fair enough, that is exactly what we need. New-Item only needs two parameters from us: the item we want to create (in our case, the path formed by combining the variables $TargetDrive and $PrimaryDirectory) and the item type, which for us is directory. The script passes the type with -type, an alias of the -ItemType parameter. And that is it! If you run the script, a new directory named MyApplicationData will be created on the F: drive.
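If you want to try New-Item without an F: drive, the sketch below creates the directory in the current user’s temporary folder instead. The sandbox location and the GUID suffix are assumptions made only for this demo; the script itself still targets F:\. It also shows that New-Item returns the item it created.

```powershell
# Hypothetical sandbox: the temp folder stands in for F:\ so the demo runs anywhere
$TargetDrive = [System.IO.Path]::GetTempPath()
# A GUID suffix keeps repeated runs from colliding with an existing directory
$PrimaryDirectory = Join-Path -Path $TargetDrive -ChildPath ("MyApplicationData-" + [guid]::NewGuid())

# New-Item returns the created item; for a directory this is a DirectoryInfo object
$Created = New-Item -Path $PrimaryDirectory -ItemType Directory
$Created.GetType().Name   # DirectoryInfo
$Created.FullName

# Clean up the (empty) sandbox directory again
Remove-Item -Path $PrimaryDirectory
```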

Our script has one weakness, though: if we run it again, it cannot create the directory because it already exists. In this case, we receive an IOException stating that the resource already exists.
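To reproduce that failure safely, the sketch below (again using a hypothetical directory in the temp folder) calls New-Item twice and catches the second error. -ErrorAction Stop turns the cmdlet’s non-terminating error into an exception that try/catch can handle. The last call shows -Force, which for directories makes New-Item hand back the existing directory instead of failing; that is an alternative to an explicit existence check, not what the script in this article does.

```powershell
# Hypothetical sandbox directory in the temp folder
$PrimaryDirectory = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ("MyApplicationData-" + [guid]::NewGuid())

# First run: the directory is created
New-Item -Path $PrimaryDirectory -ItemType Directory | Out-Null

# Second run: the directory already exists, so New-Item reports an error
$SecondRunFailed = $false
try {
    New-Item -Path $PrimaryDirectory -ItemType Directory -ErrorAction Stop | Out-Null
}
catch {
    $SecondRunFailed = $true
    "Second run failed with " + $_.Exception.GetType().FullName
}

# With -Force, New-Item returns the existing directory instead of failing
$Existing = New-Item -Path $PrimaryDirectory -ItemType Directory -Force

# Clean up the (empty) sandbox directory
Remove-Item -Path $PrimaryDirectory
```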

```powershell
# File System Configuration Script for MyApplication
#
# Copyright (C)2011 by Andreas Zapf
#
# Please feel free to re-use and adapt as required :)

# Global Variable Definitions
$TargetDrive = "F:\"
$PrimaryDirectory = "MyApplicationData"

# Test if the primary directory already exists
if( ! (Test-Path -path ($TargetDrive + $PrimaryDirectory)))
{
    # Create the primary directory
    New-Item ($TargetDrive + $PrimaryDirectory) -type directory
}
else
{
    # Notify the user that the directory exists
    "The directory " + $TargetDrive + $PrimaryDirectory + " already exists. Creation skipped."
}
```

The code above has been improved to first check if the directory exists and to create it if it does not exist. Otherwise, the system simply provides a message that the directory is already there and the creation has been skipped.

To test whether a directory (or other resource) exists, the Test-Path cmdlet is used. It returns $true if the resource exists and $false otherwise. Its result, negated with the ! operator, is the condition of an If…Then…Else construct: if the condition evaluates to $true (the directory does not exist yet), the first code block is executed; if it evaluates to $false, the Else block is executed.
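One refinement worth knowing: Test-Path returns $true for any item at the given path, including a file. If a file named MyApplicationData happened to exist, the check above would skip the creation even though there is no directory. Adding -PathType Container restricts the test to directories, as this sandbox sketch shows (the temp-folder location is again only an assumption for the demo).

```powershell
# Hypothetical sandbox in the temp folder
$Sandbox = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ("TestPathDemo-" + [guid]::NewGuid())
New-Item -Path $Sandbox -ItemType Directory | Out-Null

# Create a FILE with the name we expect to be a directory
$PrimaryDirectory = Join-Path -Path $Sandbox -ChildPath "MyApplicationData"
New-Item -Path $PrimaryDirectory -ItemType File | Out-Null

$AnyItemExists = Test-Path -Path $PrimaryDirectory                        # True: something is there
$DirectoryExists = Test-Path -Path $PrimaryDirectory -PathType Container  # False: it is a file

# Remove the sandbox and everything in it
Remove-Item -Path $Sandbox -Recurse -Force
```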

Last but not least, our code needs a little cleaning up: separating the target drive from the directory name has forced us to build the fully qualified directory name “on the fly” with the $TargetDrive + $PrimaryDirectory construct in several places. That not only makes the code longer, it also hurts maintainability and readability. Better do the following:

```powershell
# File System Configuration Script for MyApplication
#
# Copyright (C)2011 by Andreas Zapf
#
# Please feel free to re-use and adapt as required :)

# Global Variable Definitions
$TargetDrive = "F:\"
$PrimaryDirectory = $TargetDrive + "MyApplicationData"

# Test if the primary directory already exists
if( ! (Test-Path -path $PrimaryDirectory))
{
    # Create the primary directory
    New-Item $PrimaryDirectory -type directory
}
else
{
    # Notify the user that the directory exists
    "The directory " + $PrimaryDirectory + " already exists. Creation skipped."
}
```

Because the primary directory will always be on the same drive and the only reason for the separation was the ability to handle the drive letter in a single location, the variable $PrimaryDirectory can easily be defined using the variable $TargetDrive plus the directory name. As a result, the cumbersome concatenation of values in the remaining code is no longer necessary.

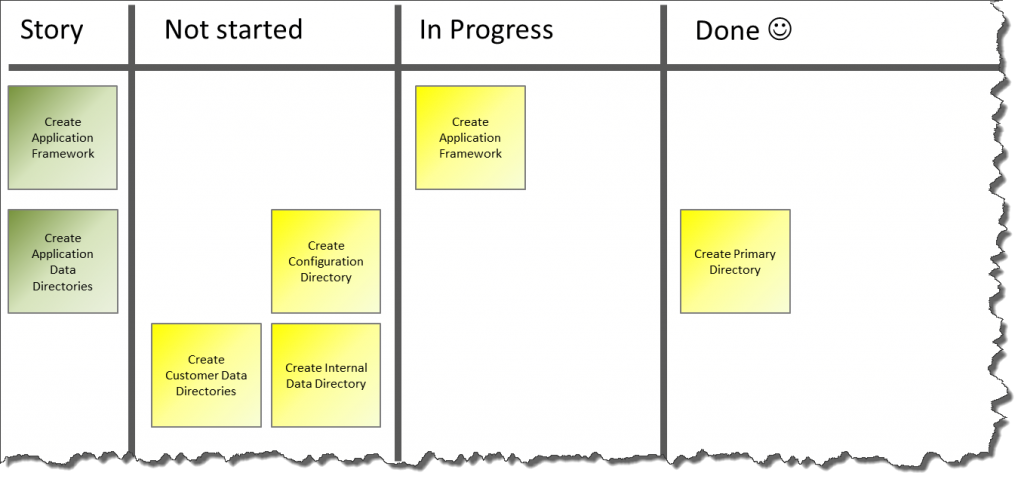

With that, our first task is done and moves to the Done column of the Task Board. We keep the Create Application Framework task in progress because there may still be some work left to do.

The second Step: Configuration & Internal Data Directories

Looking at our Task Board, we notice the tasks Create Configuration Directory and Create Internal Data Directory. Both are very similar to the finished Create Primary Directory task because they focus on the creation of a single directory in a specific location. So let’s take on those two next:

What do we need to do in our code? Well, maybe not too much! The primary directory has been created, so we now use New-Item to create the two sub-directories inside it. That means extending our Global Variable Definitions with one variable for each directory.

Why am I doing it this way? Because nobody has told us that multiple instances of these directory types (Configuration and Internal Data) are needed, and I want the script owner to have an easy identifier that shows which directory the code refers to:

```powershell
# Global Variable Definitions
$TargetDrive = "F:\"
$PrimaryDirectory = $TargetDrive + "MyApplicationData"
$ConfigurationDirectory = $PrimaryDirectory + "\ConfigurationData"
$InternalDataDirectory = $PrimaryDirectory + "\InternalDataStorage"
```

Next, we need the system to actually create those two directories but only if the parent directory exists!

```powershell
# File System Configuration Script for MyApplication
#
# Copyright (C)2011 by Andreas Zapf
#
# Please feel free to re-use and adapt as required :)

# Global Variable Definitions
$TargetDrive = "F:\"
$PrimaryDirectory = $TargetDrive + "MyApplicationData"
$ConfigurationDirectory = $PrimaryDirectory + "\ConfigurationData"
$InternalDataDirectory = $PrimaryDirectory + "\InternalDataStorage"

# Test if the primary directory already exists
if( ! (Test-Path -path $PrimaryDirectory))
{
    # Create the primary directory
    New-Item $PrimaryDirectory -type directory
}
else
{
    # Notify the user that the directory exists
    "The directory " + $PrimaryDirectory + " already exists. Creation skipped."
}

# Only create the sub-directories if the primary directory is there
if( Test-Path -path $PrimaryDirectory )
{
    # Create Configuration Directory
    New-Item $ConfigurationDirectory -type directory

    # Create Internal Data Directory
    New-Item $InternalDataDirectory -type directory
}
else
{
    "Failed to create Configuration Directory and Internal Data Directory because " + $PrimaryDirectory + " does not exist."
}
```

For simplicity, the code makes the following assumption: if the primary directory does not exist, it is created. If the primary directory exists afterwards, the two sub-directories are created, but we do not check whether they already exist. Given that, we might see a change request later:

“As an Administrator, I want the script to stop execution if the Primary Directory exists to avoid configuration confusion.”

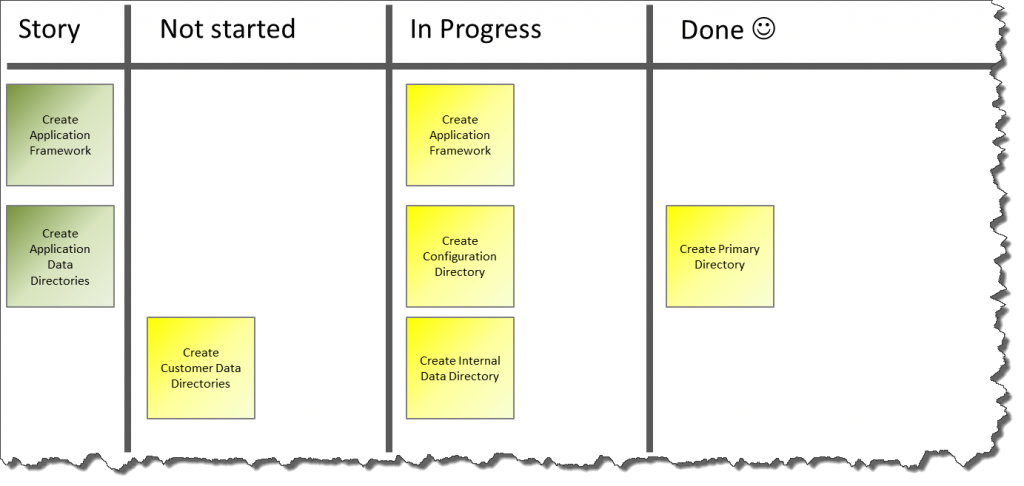

That would probably be a wise requirement, but for now it is not part of our little script! Let’s check the Task Board: two more tasks are done for the moment.
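If you want to experiment with that change request anyway, here is one possible sketch. It wraps the logic in a function (the name Initialize-MyApplicationData is my own invention, not part of the original script) that stops before creating anything if the primary directory already exists. In a standalone script you might use exit instead; a function with a return value is simply easier to try out interactively.

```powershell
function Initialize-MyApplicationData {
    param(
        # Full path of the primary directory, e.g. "F:\MyApplicationData"
        [Parameter(Mandatory)]
        [string]$PrimaryDirectory
    )

    # Change request: stop if the primary directory already exists
    if (Test-Path -Path $PrimaryDirectory) {
        Write-Warning ("The directory " + $PrimaryDirectory + " already exists. Script stopped.")
        return $false
    }

    # Create the primary directory and its two fixed sub-directories
    New-Item -Path $PrimaryDirectory -ItemType Directory | Out-Null
    New-Item -Path (Join-Path -Path $PrimaryDirectory -ChildPath "ConfigurationData") -ItemType Directory | Out-Null
    New-Item -Path (Join-Path -Path $PrimaryDirectory -ChildPath "InternalDataStorage") -ItemType Directory | Out-Null
    return $true
}

# Try it in a hypothetical sandbox under the temp folder
$Sandbox = Join-Path -Path ([System.IO.Path]::GetTempPath()) -ChildPath ("MyApplicationData-" + [guid]::NewGuid())
$FirstRun = Initialize-MyApplicationData -PrimaryDirectory $Sandbox    # True
$SecondRun = Initialize-MyApplicationData -PrimaryDirectory $Sandbox   # False, plus a warning
$SubDirectoryCount = @(Get-ChildItem -Path $Sandbox -Directory).Count
Remove-Item -Path $Sandbox -Recurse -Force
```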

The third Step: the Custom Data Directories

The last step we are going to work on is the Custom Data Directories. In principle, creating these is no different from creating the previous directories. But we do not know how many there will be! There may be one or many Custom Data Directories, so there is no way to hard-code them the way we did before.

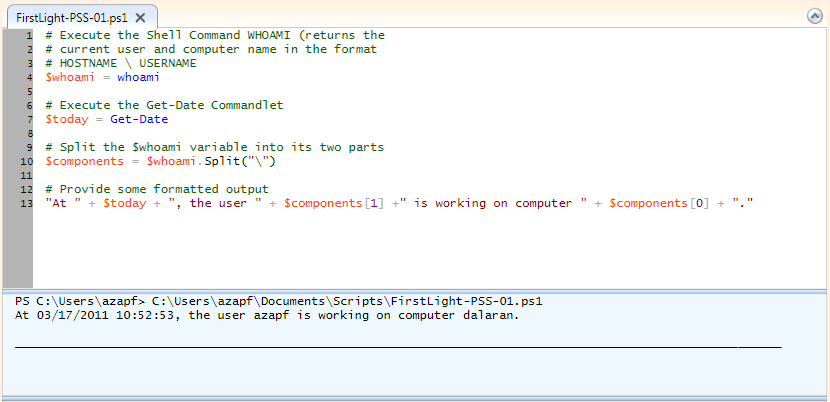

Instead, we are going to use an Array of Strings to define the Custom Data Directories and then use a ForEach loop to process them. The definition of the Array of Strings is straightforward:

```powershell
$CustomDataDirectories = "CustomDataStorage1","CustomDataStorage2"
```

Simply list all members of the array, separated by commas.
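A quick way to check what that line produces: the comma operator builds an object array. If a customer only needs a single Custom Data area, wrapping the value in @() keeps it an array, which helps if later code relies on .Count or indexing (the foreach loop itself would also accept a single string).

```powershell
# Two entries separated by a comma: PowerShell builds an object array
$CustomDataDirectories = "CustomDataStorage1","CustomDataStorage2"
$CustomDataDirectories.GetType().Name   # Object[]
$CustomDataDirectories.Count            # 2
$CustomDataDirectories[0]               # CustomDataStorage1

# A single entry without @() would just be a string; @() keeps it an array
$SingleCustomDataDirectory = @("CustomDataStorage1")
$SingleCustomDataDirectory.GetType().Name   # Object[]
$SingleCustomDataDirectory.Count            # 1
```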

```powershell
foreach ($myCustomDataDirectory in $CustomDataDirectories)
{
    # Build the full path of the current Custom Data directory
    $customDataPath = $PrimaryDirectory + "\" + $myCustomDataDirectory
    New-Item $customDataPath -type directory
}
```

The processing using the ForEach loop is equally simple: foreach (<item> in <collection>) tells PowerShell to load an element from <collection> into the variable <item> and run the loop body for it, then load the next element into <item>, and so on until no unprocessed elements remain in <collection>.

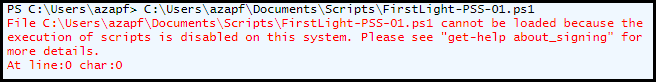

For reference, I am including the complete script here. Please keep in mind that if you download it from the Internet, you need to allow the execution of unsigned scripts or you need to create your own script from the code!
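On Windows, the relevant settings can be inspected and changed with the execution policy cmdlets. The sketch below is one common setup, not a recommendation for every environment: RemoteSigned lets locally created scripts run unsigned but still requires scripts downloaded from the Internet to be signed, and Unblock-File removes the “downloaded from the Internet” marker from a file you have reviewed. The script file name is hypothetical.

```powershell
# Show the effective execution policy for every scope
Get-ExecutionPolicy -List

# Allow local unsigned scripts for the current user only (no admin rights needed)
Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

# Hypothetical file name: mark a reviewed, downloaded script as safe to run
Unblock-File -Path .\ConfigureMyApplicationFileSystem.ps1
```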

Wrapping it up

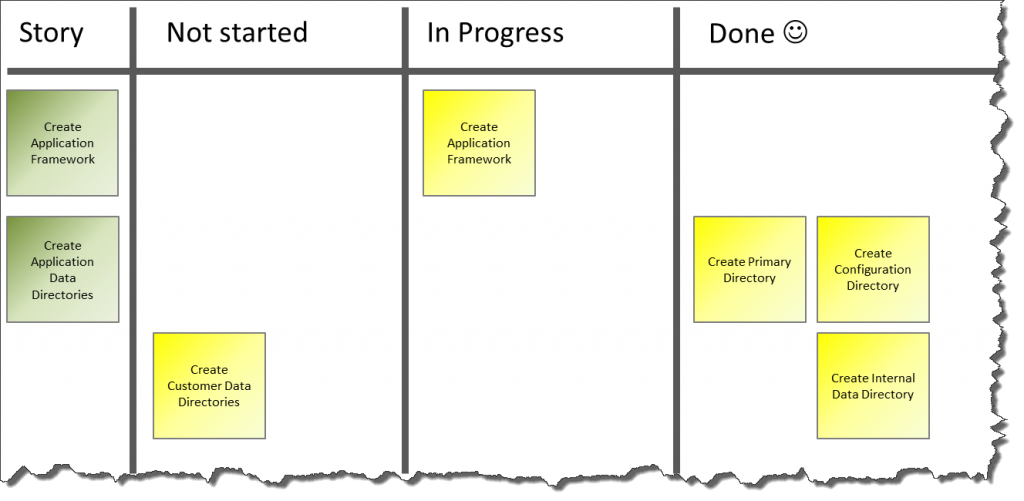

We can wrap up now; we have achieved quite a few things today. But first things first: we need to update our Task Board:

All tasks are done, so what have we got?

- We have a script that creates the directory structure we asked it to provide.

- We have a new requirement about the script stopping when the directory already exists (but we have not implemented it in the script!)

- We have learned about the New-Item cmdlet as well as the Test-Path cmdlet.

- We have learned about the If…Then…Else… construct and the ForEach Loop.

- We have used a Task Board to track our progress.